7+ Reinforcement learning competitions to check out in 2022

October 28, 2021 • Joy Zhang • Resources • 4 minutes

Reinforcement learning (RL) is a subdomain of machine learning in which agents learn to make decisions by interacting with their environment. Popular competition platforms like Kaggle are mainly suited to supervised learning problems, so RL competitions are harder to come by.
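If you're new to RL, the agent-environment interaction described above can be sketched in a few lines of Python. This is a minimal illustration only: `CorridorEnv`, its reward values, and both policies are hypothetical and not taken from any competition in this list.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: walk right along a corridor to reach the goal."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        self.position = 0
        return self.position

    def step(self, action):
        # action: 0 = move left, 1 = move right (assumed encoding)
        move = 1 if action == 1 else -1
        self.position = max(0, min(self.length, self.position + move))
        done = self.position == self.length
        # Small cost per step, bonus for reaching the goal (assumed values)
        reward = 1.0 if done else -0.1
        return self.position, reward, done


def run_episode(env, policy, max_steps=100):
    """Run one episode and return the total reward the agent collected."""
    observation = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(observation)
        observation, reward, done = env.step(action)
        total_reward += reward
        if done:
            break
    return total_reward


def random_policy(observation):
    """Ignores the observation and picks a random action."""
    return random.choice([0, 1])


def always_right(observation):
    """Always moves towards the goal."""
    return 1


if __name__ == "__main__":
    env = CorridorEnv()
    print("random policy:", run_episode(env, random_policy))
    print("always right:", run_episode(env, always_right))
```

An RL algorithm would replace `random_policy` with a policy that improves from the rewards it observes. The competitions below swap the toy corridor for much richer environments.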

In this post, I've compiled a list of 7 ongoing and annual competitions which are suitable for RL. For AI competitions that are not necessarily tailored for RL, check out this list instead: 14 Active AI Game Competitions.

1. AWS DeepRacer (2018 —, ongoing competition)

AWS DeepRacer is a beginner-friendly 3D racing simulator aimed at helping developers get started with RL. Participants can train models on Amazon SageMaker (the first 10 hours are free) and enter monthly competitions in the ongoing AWS DeepRacer League.

The AWS DeepRacer League is run in time trial format (although other challenges such as head-to-head racing exist). Top racers win prizes including merchandise, customizations, and an expenses-paid trip to Las Vegas to attend AWS re:Invent for the Championship Cup. Participants can also win a physical 1/18th scale race car, or purchase one for USD 399, to test their models in the real world.

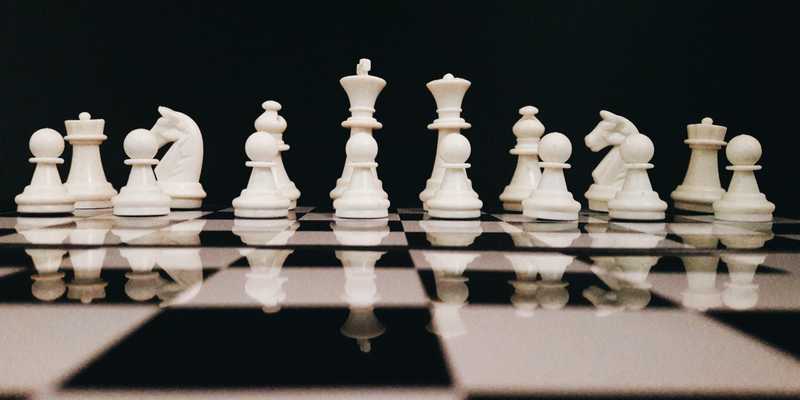

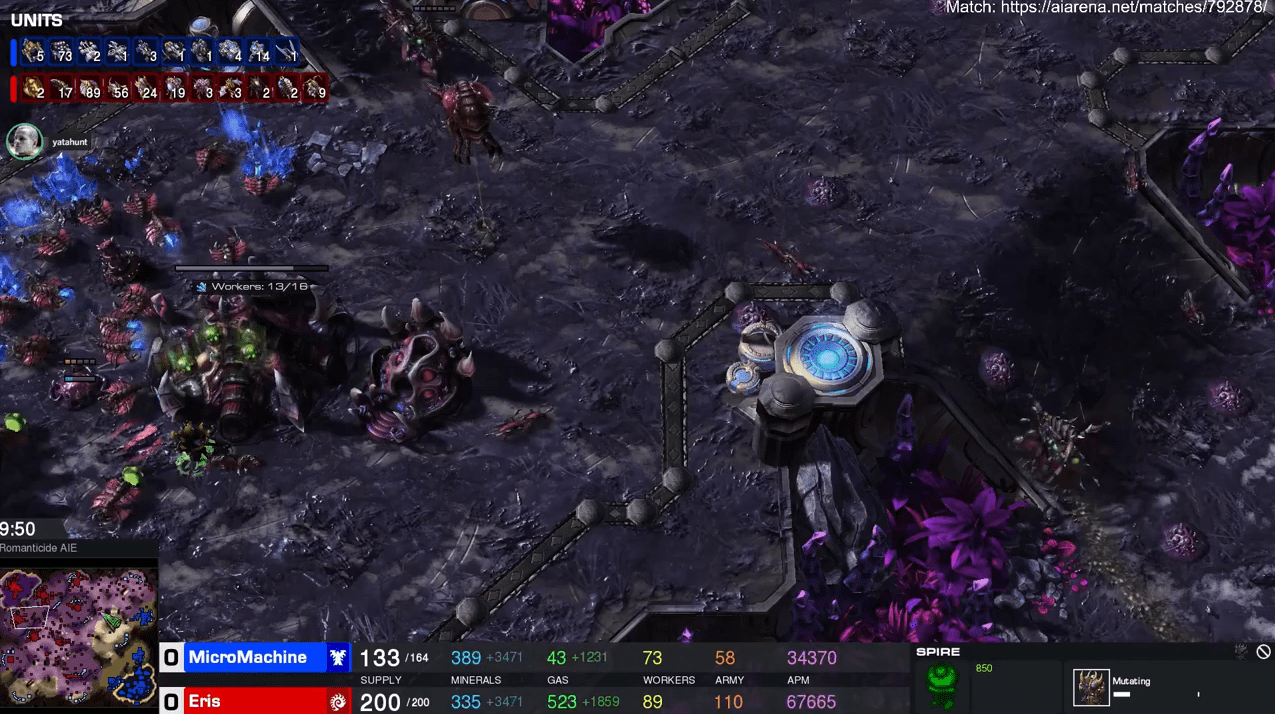

2. AIArena (2016 —, ongoing competition)

You might remember when AlphaStar reached Grandmaster status and beat two of the world's top StarCraft II players in 2019. Back in 2017, Blizzard and DeepMind released an open-source machine learning API and toolset for StarCraft II to accelerate AI research in highly complex environments.

You can still get involved with training deep RL agents in StarCraft II with the community at AIArena. They run an ongoing ranked ladder where you can compete head-to-head against other teams. Matches are livestreamed 24/7 on Twitch, with occasional community stream events.

For original StarCraft, you can also check out:

3. Coder One (2020—, ongoing competition)

Coder One is our own AI competition based on the classic console game, Bomberman. Participants build agents that navigate a 2D grid world collecting pickups and placing explosives to take down the opponent. Participants compete head-to-head against other teams and are ranked on a live leaderboard.

The game environment (called Bomberland) is challenging for out-of-the-box machine learning, requiring planning, real-time decision making, and navigating both adversarial and cooperative play.

The next competition runs from 1 to 14 September 2022, with registrations open now. Top teams have a chance to win a share of a AUD 5,000 cash prize pool, job opportunities, and a feature on the finale Twitch livestream. It is free to create an account and program an agent for the game. To enter an agent into the competitive leaderboard, you will need to purchase a $10 season pass.

4. Flatland (2019—, annual competition)

Flatland is an annual competition featured as part of NeurIPS 2020. It is designed to tackle the problem of efficiently managing dense traffic on complex railway networks. The goal is to construct the schedule that minimizes delays against the requested arrival times of all trains.
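To make the scheduling goal concrete, here is a rough sketch of a "total delay" objective. This is an assumption for illustration, not Flatland's actual scoring function: the `total_delay` helper, the train names, and the timestep values are all hypothetical.

```python
def total_delay(requested, actual):
    """Sum of lateness over all trains; early arrivals count as zero delay."""
    return sum(max(0, actual[train] - requested[train]) for train in requested)


# Requested arrival times in timesteps (hypothetical values)
requested = {"train_a": 10, "train_b": 12}

# Two candidate schedules, given as actual arrival times
schedule_1 = {"train_a": 12, "train_b": 12}  # train_a arrives 2 steps late
schedule_2 = {"train_a": 10, "train_b": 17}  # train_b arrives 5 steps late

# The better schedule is the one with the smaller total delay
best = min([schedule_1, schedule_2], key=lambda s: total_delay(requested, s))
```

Under this simplified objective, `schedule_1` is preferred because its total delay is 2 timesteps rather than 5.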

The 2021 competition is currently being run on the AIcrowd platform. Submissions are evaluated and ranked according to the total reward accumulated in a controlled setting. Prizes in previous years have included drones and VR headsets.

5. MineRL (2019—, annual competition)

MineRL is concerned with the development of sample-efficient deep RL algorithms which can solve hierarchical, sparse reward environments using human demonstrations in Minecraft.

Participants have access to a large imitation learning dataset of over 60 million frames of recorded human player data in Minecraft. The goal is to develop systems that can complete tasks such as obtaining a diamond, building a house, or searching for a cave.

The competition has been running as part of NeurIPS from 2019 to 2021 on AIcrowd. Prizes include co-authorships and over $10,000 in cash.

6. NetHack (2020—, annual competition)

NetHack is another annual competition at NeurIPS 2021 held on AIcrowd. Teams compete to build the best agents to play NetHack, an ASCII-rendered single-player dungeon-crawling game. NetHack features procedurally generated levels with hundreds of complex scenarios, making it an extremely challenging environment for current state-of-the-art RL.

Like Flatland and MineRL, submissions are ranked on a leaderboard based on score in a controlled test setting. The competition this year features a $20,000 USD cash prize pool. RL approaches are encouraged, but non-RL approaches are also accepted.
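As a rough picture of what leaderboard ranking in a controlled test setting can look like, the sketch below scores every submission on the same fixed seeds and sorts by mean score. It is not the evaluation code of any of these competitions: `toy_episode`, the seed list, and the example agents are all hypothetical.

```python
import random
from statistics import mean


def toy_episode(agent, seed):
    """Hypothetical episode: the agent guesses 10 hidden coin flips.

    The score is the number of correct guesses. Seeding the generator gives
    every agent exactly the same flips for a given seed.
    """
    rng = random.Random(seed)
    flips = [rng.randint(0, 1) for _ in range(10)]
    return sum(1 for i, flip in enumerate(flips) if agent(i) == flip)


def evaluate(agent, seeds, episode_fn):
    """Mean score of one agent across a fixed set of seeded episodes."""
    return mean(episode_fn(agent, seed) for seed in seeds)


def rank_submissions(submissions, seeds, episode_fn):
    """Return (name, score) pairs sorted from best to worst."""
    scores = {
        name: evaluate(agent, seeds, episode_fn)
        for name, agent in submissions.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Example agents (hypothetical): each maps a flip index to a guess
submissions = {
    "always_zero": lambda i: 0,
    "always_one": lambda i: 1,
    "alternating": lambda i: i % 2,
}

TEST_SEEDS = [101, 102, 103, 104, 105]  # same seeds for every submission
leaderboard = rank_submissions(submissions, TEST_SEEDS, toy_episode)
```

Keeping the seeds fixed means differences on the leaderboard come from the agents rather than from lucky episodes, which is the point of a controlled test setting.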

7. CompilerGym (2021—, leaderboard)

CompilerGym is actually a toolkit for applying reinforcement learning to compiler optimizations, rather than a competition. However, users can submit algorithms to the public repo leaderboard with their write-up and results.

Bonus: competition platforms and conferences

I prioritized competitions that are ongoing or run regularly for this list. Another good way to keep track of running competitions is to follow the platforms and conferences that host them. Here are some worth keeping an eye on:

- AIcrowd: Runs a combination of supervised ML competitions and RL competitions.

- Kaggle: Mainly supervised ML/data science competitions, but also features simulation competitions which can be good problems for RL.

- NeurIPS: Annual conference with a competition track for various machine learning competitions.

- IEEE CoG: Annual conference with a competition track, specifically for research in games.

Closing remarks

I hope this list has helped you find an interesting competition to check out and practise reinforcement learning in. As new competitions come and go, I'll aim to keep this list up-to-date. Good luck!